CVE-2024-34359 (GCVE-0-2024-34359)

Vulnerability from cvelistv5 – Published: 2024-05-10 17:07 – Updated: 2024-08-02 02:51

Title

llama-cpp-python vulnerable to Remote Code Execution by Server-Side Template Injection in Model Metadata

Summary

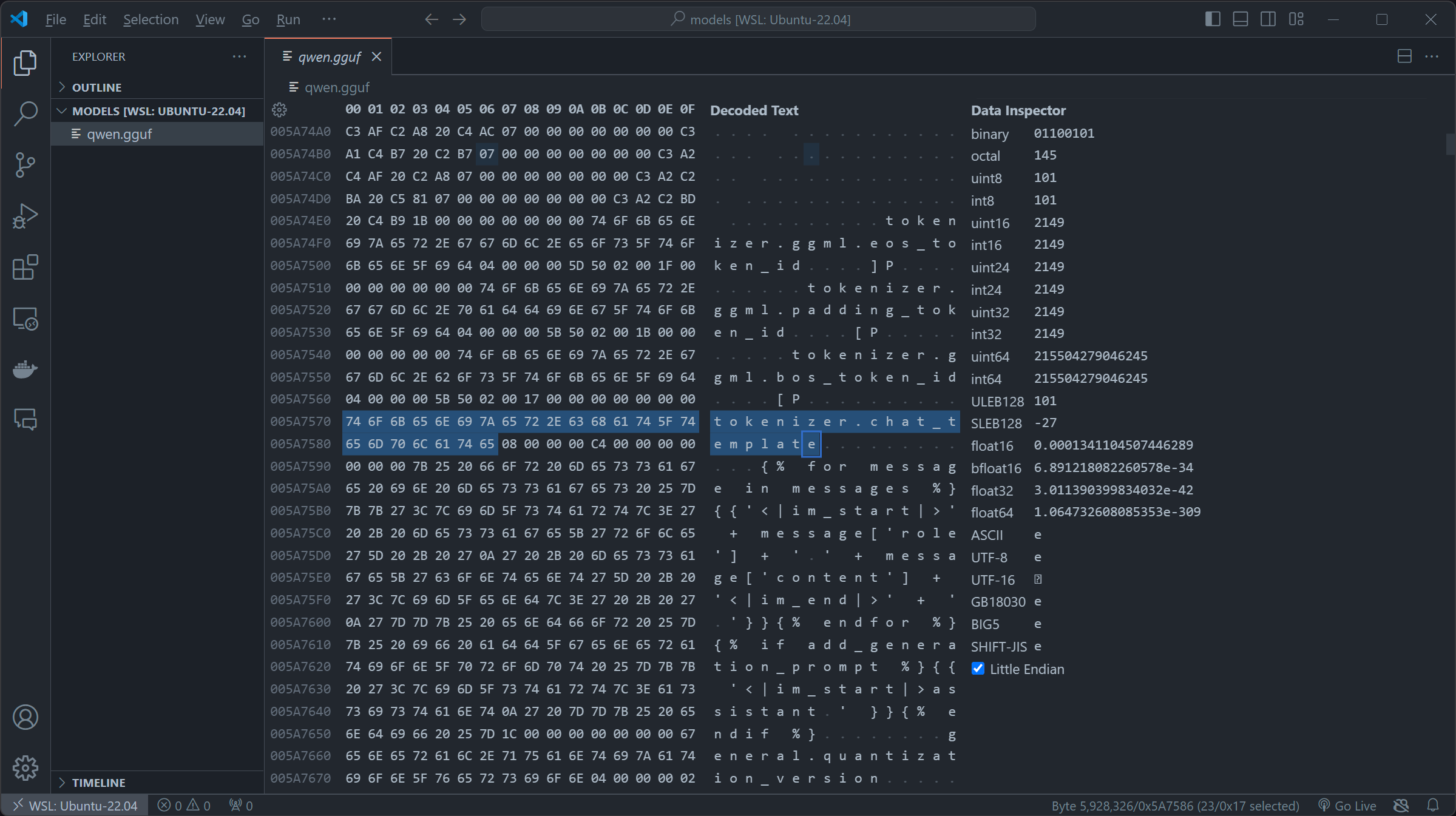

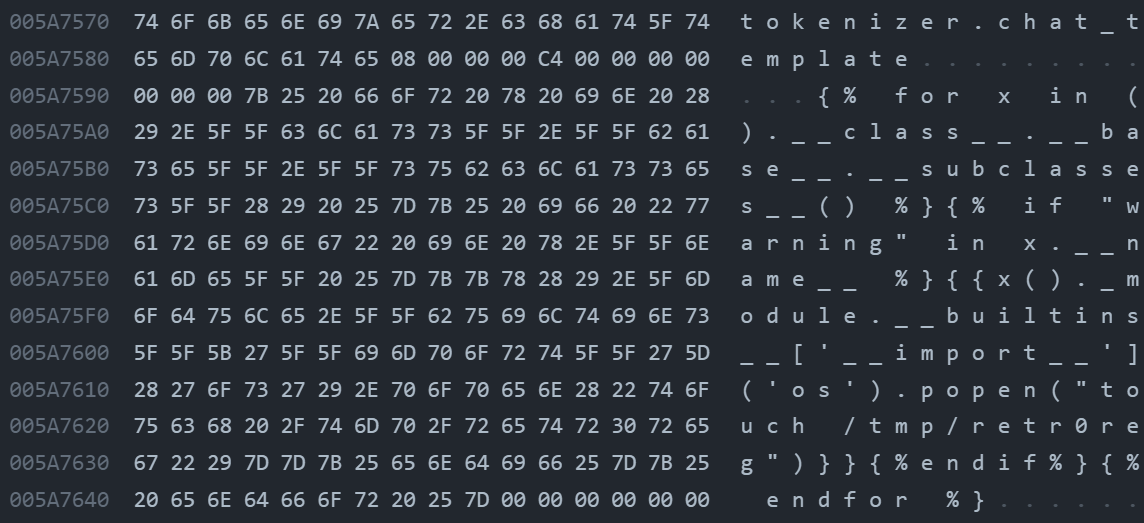

llama-cpp-python provides the Python bindings for llama.cpp. It relies on the `Llama` class in `llama.py` to load `.gguf` llama.cpp models. The class's `__init__` constructor takes several parameters that configure how the model is loaded and run. Besides NUMA and LoRA settings, tokenizer loading, and hardware settings, `__init__` also reads the chat template from the metadata of the target `.gguf` file and passes it to `llama_chat_format.Jinja2ChatFormatter.to_chat_handler()` to construct `self.chat_handler` for the model. However, `Jinja2ChatFormatter` parses the chat template from the metadata with a non-sandboxed `jinja2.Environment`, and the template is then rendered in `__call__` to build the interaction prompt. This allows Jinja2 server-side template injection, which leads to remote code execution through a carefully constructed payload.
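The difference between a plain and a sandboxed Jinja2 environment can be illustrated with a harmless introspection probe. This is a minimal sketch and not the project's code: the template string and the helper `render_untrusted` are hypothetical, and it assumes the `jinja2` package is installed. `ImmutableSandboxedEnvironment` is Jinja2's own sandbox class; its use here shows one possible way to handle untrusted templates.

```python
from jinja2 import Environment
from jinja2.exceptions import SecurityError
from jinja2.sandbox import ImmutableSandboxedEnvironment

# Hypothetical stand-in for a chat template read from untrusted .gguf metadata.
# It only walks Python internals; it does not execute anything.
UNTRUSTED_TEMPLATE = "{{ ''.__class__.__mro__ }}"


def render_untrusted(template_source: str, sandboxed: bool) -> str:
    """Render a template with either a plain or a sandboxed environment."""
    env = ImmutableSandboxedEnvironment() if sandboxed else Environment()
    try:
        return env.from_string(template_source).render()
    except SecurityError as exc:
        # The sandbox refuses chained access through underscore attributes.
        return f"blocked: {exc}"


plain_result = render_untrusted(UNTRUSTED_TEMPLATE, sandboxed=False)
sandboxed_result = render_untrusted(UNTRUSTED_TEMPLATE, sandboxed=True)
print(plain_result)      # the plain environment exposes Python internals
print(sandboxed_result)  # the sandbox reports a security error instead
```

In the plain environment the template can reach Python's class hierarchy, which is the starting point for typical SSTI payloads; the sandboxed environment rejects the same expression.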

Severity

9.7 (Critical)
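The record's CVSS v3.1 vector is `CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:H/A:H`. As a cross-check, the sketch below applies the base-score formula from the CVSS v3.1 specification to that vector. The function names are illustrative, and the code covers base metrics only. Under these assumptions the vector evaluates to 9.6, which matches the NVD-sourced metrics in the record, while the CNA container lists 9.7.

```python
import math

# Metric weights from the CVSS v3.1 specification (base metrics only).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in CVSS v3.1 Appendix A."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the CVSS v3.1 base score for a full base vector string."""
    # Drop the "CVSS:3.1" prefix and map metric names to their values.
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    changed = metrics["S"] == "C"
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[metrics["PR"]]

    iss = 1 - (
        (1 - CIA[metrics["C"]]) * (1 - CIA[metrics["I"]]) * (1 - CIA[metrics["A"]])
    )
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    exploitability = 8.22 * AV[metrics["AV"]] * AC[metrics["AC"]] * pr * UI[metrics["UI"]]

    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


score = base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:H/A:H")
print(score)  # 9.6
```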

CWE

- CWE-76 - Improper Neutralization of Equivalent Special Elements

Assigner

GitHub_M

References

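| URL | Tags |
|---|---|
| https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829 | x_refsource_CONFIRM |
| https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df | x_refsource_MISC |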

Impacted products

| Vendor | Product | Version |
|---|---|---|
| abetlen | llama-cpp-python | Affected: >= 0.2.30, <= 0.2.71 |
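The affected range above can be turned into a quick check against a version string. This is a minimal sketch: the helper names are hypothetical, and it assumes plain dotted numeric versions such as `0.2.45` (no pre-release or local suffixes).

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Convert a dotted numeric version such as '0.2.45' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


# Bounds taken from the affected range in the record (both ends inclusive).
AFFECTED_MIN = parse_version("0.2.30")
AFFECTED_MAX = parse_version("0.2.71")


def is_affected(version: str) -> bool:
    """Return True if the version lies in the range >= 0.2.30, <= 0.2.71."""
    return AFFECTED_MIN <= parse_version(version) <= AFFECTED_MAX


for candidate in ("0.2.29", "0.2.30", "0.2.71", "0.2.72"):
    print(candidate, is_affected(candidate))
```

Tuple comparison keeps `0.2.9` below `0.2.30`, which a plain string comparison would get wrong.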

{

"containers": {

"adp": [

{

"affected": [

{

"cpes": [

"cpe:2.3:a:abetlen:llama-cpp-python:*:*:*:*:*:*:*:*"

],

"defaultStatus": "unknown",

"product": "llama-cpp-python",

"vendor": "abetlen",

"versions": [

{

"lessThanOrEqual": "0.2.71",

"status": "affected",

"version": "0.2.30",

"versionType": "custom"

}

]

}

],

"metrics": [

{

"other": {

"content": {

"id": "CVE-2024-34359",

"options": [

{

"Exploitation": "poc"

},

{

"Automatable": "no"

},

{

"Technical Impact": "total"

}

],

"role": "CISA Coordinator",

"timestamp": "2024-05-15T19:35:24.408358Z",

"version": "2.0.3"

},

"type": "ssvc"

}

}

],

"providerMetadata": {

"dateUpdated": "2024-06-06T18:29:15.313Z",

"orgId": "134c704f-9b21-4f2e-91b3-4a467353bcc0",

"shortName": "CISA-ADP"

},

"title": "CISA ADP Vulnrichment"

},

{

"providerMetadata": {

"dateUpdated": "2024-08-02T02:51:10.739Z",

"orgId": "af854a3a-2127-422b-91ae-364da2661108",

"shortName": "CVE"

},

"references": [

{

"name": "https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829",

"tags": [

"x_refsource_CONFIRM",

"x_transferred"

],

"url": "https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829"

},

{

"name": "https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df",

"tags": [

"x_refsource_MISC",

"x_transferred"

],

"url": "https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df"

}

],

"title": "CVE Program Container"

}

],

"cna": {

"affected": [

{

"product": "llama-cpp-python",

"vendor": "abetlen",

"versions": [

{

"status": "affected",

"version": "\u003e= 0.2.30, \u003c= 0.2.71"

}

]

}

],

"descriptions": [

{

"lang": "en",

"value": "llama-cpp-python is the Python bindings for llama.cpp. `llama-cpp-python` depends on class `Llama` in `llama.py` to load `.gguf` llama.cpp or Latency Machine Learning Models. The `__init__` constructor built in the `Llama` takes several parameters to configure the loading and running of the model. Other than `NUMA, LoRa settings`, `loading tokenizers,` and `hardware settings`, `__init__` also loads the `chat template` from targeted `.gguf` \u0027s Metadata and furtherly parses it to `llama_chat_format.Jinja2ChatFormatter.to_chat_handler()` to construct the `self.chat_handler` for this model. Nevertheless, `Jinja2ChatFormatter` parse the `chat template` within the Metadate with sandbox-less `jinja2.Environment`, which is furthermore rendered in `__call__` to construct the `prompt` of interaction. This allows `jinja2` Server Side Template Injection which leads to remote code execution by a carefully constructed payload."

}

],

"metrics": [

{

"cvssV3_1": {

"attackComplexity": "LOW",

"attackVector": "NETWORK",

"availabilityImpact": "HIGH",

"baseScore": 9.7,

"baseSeverity": "CRITICAL",

"confidentialityImpact": "HIGH",

"integrityImpact": "HIGH",

"privilegesRequired": "NONE",

"scope": "CHANGED",

"userInteraction": "REQUIRED",

"vectorString": "CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:H/A:H",

"version": "3.1"

}

}

],

"problemTypes": [

{

"descriptions": [

{

"cweId": "CWE-76",

"description": "CWE-76: Improper Neutralization of Equivalent Special Elements",

"lang": "en",

"type": "CWE"

}

]

}

],

"providerMetadata": {

"dateUpdated": "2024-05-10T17:07:18.850Z",

"orgId": "a0819718-46f1-4df5-94e2-005712e83aaa",

"shortName": "GitHub_M"

},

"references": [

{

"name": "https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829",

"tags": [

"x_refsource_CONFIRM"

],

"url": "https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829"

},

{

"name": "https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df",

"tags": [

"x_refsource_MISC"

],

"url": "https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df"

}

],

"source": {

"advisory": "GHSA-56xg-wfcc-g829",

"discovery": "UNKNOWN"

},

"title": "llama-cpp-python vulnerable to Remote Code Execution by Server-Side Template Injection in Model Metadata"

}

},

"cveMetadata": {

"assignerOrgId": "a0819718-46f1-4df5-94e2-005712e83aaa",

"assignerShortName": "GitHub_M",

"cveId": "CVE-2024-34359",

"datePublished": "2024-05-10T17:07:18.850Z",

"dateReserved": "2024-05-02T06:36:32.439Z",

"dateUpdated": "2024-08-02T02:51:10.739Z",

"state": "PUBLISHED"

},

"dataType": "CVE_RECORD",

"dataVersion": "5.1",

"vulnerability-lookup:meta": {

"fkie_nvd": {

"descriptions": "[{\"lang\": \"en\", \"value\": \"llama-cpp-python is the Python bindings for llama.cpp. `llama-cpp-python` depends on class `Llama` in `llama.py` to load `.gguf` llama.cpp or Latency Machine Learning Models. The `__init__` constructor built in the `Llama` takes several parameters to configure the loading and running of the model. Other than `NUMA, LoRa settings`, `loading tokenizers,` and `hardware settings`, `__init__` also loads the `chat template` from targeted `.gguf` \u0027s Metadata and furtherly parses it to `llama_chat_format.Jinja2ChatFormatter.to_chat_handler()` to construct the `self.chat_handler` for this model. Nevertheless, `Jinja2ChatFormatter` parse the `chat template` within the Metadate with sandbox-less `jinja2.Environment`, which is furthermore rendered in `__call__` to construct the `prompt` of interaction. This allows `jinja2` Server Side Template Injection which leads to remote code execution by a carefully constructed payload.\"}, {\"lang\": \"es\", \"value\": \"llama-cpp-python son los enlaces de Python para llama.cpp. `llama-cpp-python` depende de la clase `Llama` en `llama.py` para cargar `.gguf` llama.cpp o modelos de aprendizaje autom\\u00e1tico de latencia. El constructor `__init__` integrado en `Llama` toma varios par\\u00e1metros para configurar la carga y ejecuci\\u00f3n del modelo. Adem\\u00e1s de `NUMA, configuraci\\u00f3n de LoRa`, `carga de tokenizadores` y `configuraci\\u00f3n de hardware`, `__init__` tambi\\u00e9n carga la `plantilla de chat` desde los metadatos `.gguf` espec\\u00edficos y adem\\u00e1s la analiza en `llama_chat_format.Jinja2ChatFormatter.to_chat_handler ()` para construir el `self.chat_handler` para este modelo. Sin embargo, `Jinja2ChatFormatter` analiza la `plantilla de chat` dentro del Metadate con `jinja2.Environment` sin zona de pruebas, que adem\\u00e1s se representa en `__call__` para construir el `mensaje` de interacci\\u00f3n. 
Esto permite la inyecci\\u00f3n de plantilla del lado del servidor `jinja2`, lo que conduce a la ejecuci\\u00f3n remota de c\\u00f3digo mediante un payload cuidadosamente construida.\"}]",

"id": "CVE-2024-34359",

"lastModified": "2024-11-21T09:18:30.130",

"metrics": "{\"cvssMetricV31\": [{\"source\": \"security-advisories@github.com\", \"type\": \"Secondary\", \"cvssData\": {\"version\": \"3.1\", \"vectorString\": \"CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:H/A:H\", \"baseScore\": 9.6, \"baseSeverity\": \"CRITICAL\", \"attackVector\": \"NETWORK\", \"attackComplexity\": \"LOW\", \"privilegesRequired\": \"NONE\", \"userInteraction\": \"REQUIRED\", \"scope\": \"CHANGED\", \"confidentialityImpact\": \"HIGH\", \"integrityImpact\": \"HIGH\", \"availabilityImpact\": \"HIGH\"}, \"exploitabilityScore\": 2.8, \"impactScore\": 6.0}]}",

"published": "2024-05-14T15:38:45.093",

"references": "[{\"url\": \"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\", \"source\": \"security-advisories@github.com\"}, {\"url\": \"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\", \"source\": \"security-advisories@github.com\"}, {\"url\": \"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\", \"source\": \"af854a3a-2127-422b-91ae-364da2661108\"}, {\"url\": \"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\", \"source\": \"af854a3a-2127-422b-91ae-364da2661108\"}]",

"sourceIdentifier": "security-advisories@github.com",

"vulnStatus": "Awaiting Analysis",

"weaknesses": "[{\"source\": \"security-advisories@github.com\", \"type\": \"Secondary\", \"description\": [{\"lang\": \"en\", \"value\": \"CWE-76\"}]}]"

},

"nvd": "{\"cve\":{\"id\":\"CVE-2024-34359\",\"sourceIdentifier\":\"security-advisories@github.com\",\"published\":\"2024-05-14T15:38:45.093\",\"lastModified\":\"2024-11-21T09:18:30.130\",\"vulnStatus\":\"Awaiting Analysis\",\"cveTags\":[],\"descriptions\":[{\"lang\":\"en\",\"value\":\"llama-cpp-python is the Python bindings for llama.cpp. `llama-cpp-python` depends on class `Llama` in `llama.py` to load `.gguf` llama.cpp or Latency Machine Learning Models. The `__init__` constructor built in the `Llama` takes several parameters to configure the loading and running of the model. Other than `NUMA, LoRa settings`, `loading tokenizers,` and `hardware settings`, `__init__` also loads the `chat template` from targeted `.gguf` \u0027s Metadata and furtherly parses it to `llama_chat_format.Jinja2ChatFormatter.to_chat_handler()` to construct the `self.chat_handler` for this model. Nevertheless, `Jinja2ChatFormatter` parse the `chat template` within the Metadate with sandbox-less `jinja2.Environment`, which is furthermore rendered in `__call__` to construct the `prompt` of interaction. This allows `jinja2` Server Side Template Injection which leads to remote code execution by a carefully constructed payload.\"},{\"lang\":\"es\",\"value\":\"llama-cpp-python son los enlaces de Python para llama.cpp. `llama-cpp-python` depende de la clase `Llama` en `llama.py` para cargar `.gguf` llama.cpp o modelos de aprendizaje autom\u00e1tico de latencia. El constructor `__init__` integrado en `Llama` toma varios par\u00e1metros para configurar la carga y ejecuci\u00f3n del modelo. Adem\u00e1s de `NUMA, configuraci\u00f3n de LoRa`, `carga de tokenizadores` y `configuraci\u00f3n de hardware`, `__init__` tambi\u00e9n carga la `plantilla de chat` desde los metadatos `.gguf` espec\u00edficos y adem\u00e1s la analiza en `llama_chat_format.Jinja2ChatFormatter.to_chat_handler ()` para construir el `self.chat_handler` para este modelo. 
Sin embargo, `Jinja2ChatFormatter` analiza la `plantilla de chat` dentro del Metadate con `jinja2.Environment` sin zona de pruebas, que adem\u00e1s se representa en `__call__` para construir el `mensaje` de interacci\u00f3n. Esto permite la inyecci\u00f3n de plantilla del lado del servidor `jinja2`, lo que conduce a la ejecuci\u00f3n remota de c\u00f3digo mediante un payload cuidadosamente construida.\"}],\"metrics\":{\"cvssMetricV31\":[{\"source\":\"security-advisories@github.com\",\"type\":\"Secondary\",\"cvssData\":{\"version\":\"3.1\",\"vectorString\":\"CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:H/A:H\",\"baseScore\":9.6,\"baseSeverity\":\"CRITICAL\",\"attackVector\":\"NETWORK\",\"attackComplexity\":\"LOW\",\"privilegesRequired\":\"NONE\",\"userInteraction\":\"REQUIRED\",\"scope\":\"CHANGED\",\"confidentialityImpact\":\"HIGH\",\"integrityImpact\":\"HIGH\",\"availabilityImpact\":\"HIGH\"},\"exploitabilityScore\":2.8,\"impactScore\":6.0}]},\"weaknesses\":[{\"source\":\"security-advisories@github.com\",\"type\":\"Secondary\",\"description\":[{\"lang\":\"en\",\"value\":\"CWE-76\"}]}],\"references\":[{\"url\":\"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\",\"source\":\"security-advisories@github.com\"},{\"url\":\"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\",\"source\":\"security-advisories@github.com\"},{\"url\":\"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\",\"source\":\"af854a3a-2127-422b-91ae-364da2661108\"},{\"url\":\"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\",\"source\":\"af854a3a-2127-422b-91ae-364da2661108\"}]}}",

"vulnrichment": {

"containers": "{\"adp\": [{\"title\": \"CVE Program Container\", \"references\": [{\"url\": \"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\", \"name\": \"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\", \"tags\": [\"x_refsource_CONFIRM\", \"x_transferred\"]}, {\"url\": \"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\", \"name\": \"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\", \"tags\": [\"x_refsource_MISC\", \"x_transferred\"]}], \"providerMetadata\": {\"orgId\": \"af854a3a-2127-422b-91ae-364da2661108\", \"shortName\": \"CVE\", \"dateUpdated\": \"2024-08-02T02:51:10.739Z\"}}, {\"title\": \"CISA ADP Vulnrichment\", \"metrics\": [{\"other\": {\"type\": \"ssvc\", \"content\": {\"id\": \"CVE-2024-34359\", \"role\": \"CISA Coordinator\", \"options\": [{\"Exploitation\": \"poc\"}, {\"Automatable\": \"no\"}, {\"Technical Impact\": \"total\"}], \"version\": \"2.0.3\", \"timestamp\": \"2024-05-15T19:35:24.408358Z\"}}}], \"affected\": [{\"cpes\": [\"cpe:2.3:a:abetlen:llama-cpp-python:*:*:*:*:*:*:*:*\"], \"vendor\": \"abetlen\", \"product\": \"llama-cpp-python\", \"versions\": [{\"status\": \"affected\", \"version\": \"0.2.30\", \"versionType\": \"custom\", \"lessThanOrEqual\": \"0.2.71\"}], \"defaultStatus\": \"unknown\"}], \"providerMetadata\": {\"orgId\": \"134c704f-9b21-4f2e-91b3-4a467353bcc0\", \"shortName\": \"CISA-ADP\", \"dateUpdated\": \"2024-05-15T19:53:45.656Z\"}}], \"cna\": {\"title\": \"llama-cpp-python vulnerable to Remote Code Execution by Server-Side Template Injection in Model Metadata\", \"source\": {\"advisory\": \"GHSA-56xg-wfcc-g829\", \"discovery\": \"UNKNOWN\"}, \"metrics\": [{\"cvssV3_1\": {\"scope\": \"CHANGED\", \"version\": \"3.1\", \"baseScore\": 9.7, \"attackVector\": \"NETWORK\", \"baseSeverity\": \"CRITICAL\", \"vectorString\": \"CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:H/I:H/A:H\", 
\"integrityImpact\": \"HIGH\", \"userInteraction\": \"REQUIRED\", \"attackComplexity\": \"LOW\", \"availabilityImpact\": \"HIGH\", \"privilegesRequired\": \"NONE\", \"confidentialityImpact\": \"HIGH\"}}], \"affected\": [{\"vendor\": \"abetlen\", \"product\": \"llama-cpp-python\", \"versions\": [{\"status\": \"affected\", \"version\": \"\u003e= 0.2.30, \u003c= 0.2.71\"}]}], \"references\": [{\"url\": \"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\", \"name\": \"https://github.com/abetlen/llama-cpp-python/security/advisories/GHSA-56xg-wfcc-g829\", \"tags\": [\"x_refsource_CONFIRM\"]}, {\"url\": \"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\", \"name\": \"https://github.com/abetlen/llama-cpp-python/commit/b454f40a9a1787b2b5659cd2cb00819d983185df\", \"tags\": [\"x_refsource_MISC\"]}], \"descriptions\": [{\"lang\": \"en\", \"value\": \"llama-cpp-python is the Python bindings for llama.cpp. `llama-cpp-python` depends on class `Llama` in `llama.py` to load `.gguf` llama.cpp or Latency Machine Learning Models. The `__init__` constructor built in the `Llama` takes several parameters to configure the loading and running of the model. Other than `NUMA, LoRa settings`, `loading tokenizers,` and `hardware settings`, `__init__` also loads the `chat template` from targeted `.gguf` \u0027s Metadata and furtherly parses it to `llama_chat_format.Jinja2ChatFormatter.to_chat_handler()` to construct the `self.chat_handler` for this model. Nevertheless, `Jinja2ChatFormatter` parse the `chat template` within the Metadate with sandbox-less `jinja2.Environment`, which is furthermore rendered in `__call__` to construct the `prompt` of interaction. 
This allows `jinja2` Server Side Template Injection which leads to remote code execution by a carefully constructed payload.\"}], \"problemTypes\": [{\"descriptions\": [{\"lang\": \"en\", \"type\": \"CWE\", \"cweId\": \"CWE-76\", \"description\": \"CWE-76: Improper Neutralization of Equivalent Special Elements\"}]}], \"providerMetadata\": {\"orgId\": \"a0819718-46f1-4df5-94e2-005712e83aaa\", \"shortName\": \"GitHub_M\", \"dateUpdated\": \"2024-05-10T17:07:18.850Z\"}}}",

"cveMetadata": "{\"cveId\": \"CVE-2024-34359\", \"state\": \"PUBLISHED\", \"dateUpdated\": \"2024-08-02T02:51:10.739Z\", \"dateReserved\": \"2024-05-02T06:36:32.439Z\", \"assignerOrgId\": \"a0819718-46f1-4df5-94e2-005712e83aaa\", \"datePublished\": \"2024-05-10T17:07:18.850Z\", \"assignerShortName\": \"GitHub_M\"}",

"dataType": "CVE_RECORD",

"dataVersion": "5.1"

}

}

}

Sightings

| Author | Source | Type | Date |
|---|---|---|---|

Nomenclature

- Seen: The vulnerability was mentioned, discussed, or observed by the user.

- Confirmed: The vulnerability has been validated from an analyst's perspective.

- Published Proof of Concept: A public proof of concept is available for this vulnerability.

- Exploited: The vulnerability was observed as exploited by the user who reported the sighting.

- Patched: The vulnerability was observed as successfully patched by the user who reported the sighting.

- Not exploited: The vulnerability was not observed as exploited by the user who reported the sighting.

- Not confirmed: The user expressed doubt about the validity of the vulnerability.

- Not patched: The vulnerability was not observed as successfully patched by the user who reported the sighting.
